In a previous post we illustrated how inheritance can help refine behavior for a particular case. In this post we’ll take a look at a different approach.

Composition over inheritance

The Gang of Four book advises using composition over inheritance. Composition is a technique that breaks an object’s overall behavior into smaller objects, each tasked with one aspect of it. This allows for better reuse and a more maintainable codebase.

Let’s see how it works.

Refactoring from an inheritance hierarchy to a composition model

Identify the aspects of the behavior

The first thing we’re going to do is identify the steps of the behavior being overridden.

public virtual decimal CalcBonus(Vendor vendor)

{

decimal bonus = 0;

bonus = washMachineSellingBonus(vendor);

bonus += blenderSellingBonus(vendor);

bonus += stoveSellingBonus(vendor);

return bonus;

}

In this case these would be washMachineSellingBonus, blenderSellingBonus and stoveSellingBonus. It’s worth mentioning that you’ll find code where the steps are not as easy to spot as in this example. Nevertheless, they’re still there. Every algorithm is just a bunch of steps in a certain order.

Create abstractions as needed

In our example washMachineSellingBonus, blenderSellingBonus and stoveSellingBonus are, as their names suggest, bonuses. We can make this implicit abstraction explicit by creating an interface to represent it:

public interface Bonus

{

decimal Apply(Vendor vendor);

}

public class WashMachineSellingBonus : Bonus {…}

public class BlenderSellingBonus : Bonus {…}

public class StoveSellingBonus : Bonus {…}

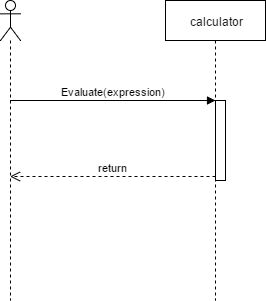
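To make the interface a bit more concrete, here is a minimal sketch of what one implementation might look like. The post never shows the members of Vendor or the actual bonus rules, so the WashMachinesSold property and the flat per-unit rate below are assumptions made up purely for illustration.

```csharp
// Hypothetical Vendor shape: the post does not show its members.
public class Vendor
{
    public int WashMachinesSold { get; set; }   // assumed property
}

public interface Bonus
{
    decimal Apply(Vendor vendor);
}

// Assumed rule, for illustration only: a flat amount per unit sold.
public class WashMachineSellingBonus : Bonus
{
    private const decimal AmountPerUnit = 10m;  // made-up rate

    public decimal Apply(Vendor vendor)
    {
        return vendor.WashMachinesSold * AmountPerUnit;
    }
}
```

The point is that each bonus class knows only its own rule and nothing about the others.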

By doing this, the calculator object becomes responsible only for deciding which bonuses apply and for keeping track of the total amount, while the command pattern lets each bonus object encapsulate its own calculation logic.

using System.Collections.Generic;

using System.Linq;

public class BonusCalculator

{

List<Bonus> bonuses = new List<Bonus>();

public BonusCalculator()

{

bonuses.Add(new WashMachineSellingBonus());

bonuses.Add(new BlenderSellingBonus());

bonuses.Add(new StoveSellingBonus());

}

public decimal CalcBonus(Vendor vendor)

{

return bonuses.Sum(b=>b.Apply(vendor));

}

}

So far, so good. But what’s the real benefit of this?

Inject behavior at runtime

If we apply the Dependency Inversion Principle, something interesting happens.

public class BonusCalculator

{

List<Bonus> bonuses = new List<Bonus>();

public BonusCalculator(IEnumerable<Bonus> bonus)

{

bonuses.AddRange(bonus);

}

public decimal CalcBonus(Vendor vendor)

{

return bonuses.Sum(b=>b.Apply(vendor));

}

}
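Because the bonuses now arrive through the constructor, the caller decides what the calculator does. The sketch below shows the same BonusCalculator producing different results depending on what is injected. FixedBonus and InjectionDemo are hypothetical helpers, not part of the original example; FixedBonus simply stands in for a real bonus rule by always paying a constant amount.

```csharp
using System.Collections.Generic;
using System.Linq;

public class Vendor { }

public interface Bonus { decimal Apply(Vendor vendor); }

// Hypothetical stand-in for a real bonus rule: always pays the same amount.
public class FixedBonus : Bonus
{
    private readonly decimal amount;
    public FixedBonus(decimal amount) { this.amount = amount; }
    public decimal Apply(Vendor vendor) { return amount; }
}

public class BonusCalculator
{
    private readonly List<Bonus> bonuses = new List<Bonus>();

    public BonusCalculator(IEnumerable<Bonus> bonus) { bonuses.AddRange(bonus); }

    public decimal CalcBonus(Vendor vendor) { return bonuses.Sum(b => b.Apply(vendor)); }
}

public static class InjectionDemo
{
    // Two bonuses injected: 100 + 50.
    public static decimal Standard(Vendor vendor)
    {
        var calculator = new BonusCalculator(new Bonus[] { new FixedBonus(100m), new FixedBonus(50m) });
        return calculator.CalcBonus(vendor);
    }

    // Same class, a different set injected: only 50.
    public static decimal Reduced(Vendor vendor)
    {
        var calculator = new BonusCalculator(new Bonus[] { new FixedBonus(50m) });
        return calculator.CalcBonus(vendor);
    }
}
```

No subclass of BonusCalculator was needed to get the second behavior; only the list passed in changed.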

Now our BonusCalculator class becomes a mere container. This means that the behavior must be set up somewhere else. If needed, the definition of the bonus calculator can now live outside the code, for example in a configuration file.

public class BonusCalculatorFactory

{

public BonusCalculator GetBonusCalculator(string region)

{

// Look up a configuration file, a database or a web service and get the bonuses that apply to this particular region.

throw new System.NotImplementedException(); // placeholder until the lookup is written

}

}

The idea here is that you can now modify the behavior of the BonusCalculator without needing an inheritance hierarchy.
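One way such a factory could be filled in is sketched below. Two in-memory dictionaries stand in for the configuration source, and the region names, bonus names and amounts are all invented for the example. FixedBonus is again a hypothetical placeholder for real bonus classes.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public class Vendor { }

public interface Bonus { decimal Apply(Vendor vendor); }

// Hypothetical stand-in for a real bonus rule.
public class FixedBonus : Bonus
{
    private readonly decimal amount;
    public FixedBonus(decimal amount) { this.amount = amount; }
    public decimal Apply(Vendor vendor) { return amount; }
}

public class BonusCalculator
{
    private readonly List<Bonus> bonuses = new List<Bonus>();
    public BonusCalculator(IEnumerable<Bonus> bonus) { bonuses.AddRange(bonus); }
    public decimal CalcBonus(Vendor vendor) { return bonuses.Sum(b => b.Apply(vendor)); }
}

public class BonusCalculatorFactory
{
    // Maps a bonus name, as it might appear in configuration, to a constructor.
    private static readonly Dictionary<string, Func<Bonus>> Registry =
        new Dictionary<string, Func<Bonus>>
        {
            { "washMachine", () => new FixedBonus(100m) },
            { "blender", () => new FixedBonus(20m) },
            { "stove", () => new FixedBonus(80m) }
        };

    // Assumed stand-in for a configuration file, database or web service.
    private static readonly Dictionary<string, string[]> BonusesByRegion =
        new Dictionary<string, string[]>
        {
            { "north", new[] { "washMachine", "blender", "stove" } },
            { "south", new[] { "blender" } }
        };

    public BonusCalculator GetBonusCalculator(string region)
    {
        string[] names;
        if (!BonusesByRegion.TryGetValue(region, out names))
        {
            names = new string[0];   // unknown region: no bonuses apply
        }
        return new BonusCalculator(names.Select(name => Registry[name]()));
    }
}
```

Changing which bonuses a region receives then means editing the data in BonusesByRegion, not the calculator. With a real external source, that edit would not require touching the code at all.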

Advantages over inheritance

The main advantages of using composition are:

- Changing behavior can be done at runtime without a need to recompile the code.

- You don’t have the fragile base class problem anymore.

- You can easily add new behaviors (in the example, just implement the Bonus interface).

- You can compose behaviors to create more complex ones.

Let’s take a quick look at this last point.

Mix and match to create new behavior

Let’s create a composed bonus object. We can reuse the template functionality from the bonus calculator.

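public class ExtraBonus : BonusCalculator, Bonus

{

// Pass the injected bonuses through to the base calculator.

public ExtraBonus(IEnumerable<Bonus> bonus) : base(bonus) { }

public decimal Apply(Vendor vendor)

{

decimal theBonus = CalcBonus(vendor);

// The m suffix keeps the multiplier a decimal; a double literal would not compile here.

return theBonus > 2000 ? theBonus * 1.10m : theBonus;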

}

}
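Since ExtraBonus is itself a Bonus, it can be handed to another BonusCalculator like any other bonus. The sketch below works through one such nesting with made-up amounts. FixedBonus and CompositionDemo are hypothetical helpers, and the ExtraBonus shown assumes the constructor-injected version of BonusCalculator.

```csharp
using System.Collections.Generic;
using System.Linq;

public class Vendor { }

public interface Bonus { decimal Apply(Vendor vendor); }

// Hypothetical stand-in for a real bonus rule.
public class FixedBonus : Bonus
{
    private readonly decimal amount;
    public FixedBonus(decimal amount) { this.amount = amount; }
    public decimal Apply(Vendor vendor) { return amount; }
}

public class BonusCalculator
{
    private readonly List<Bonus> bonuses = new List<Bonus>();
    public BonusCalculator(IEnumerable<Bonus> bonus) { bonuses.AddRange(bonus); }
    public decimal CalcBonus(Vendor vendor) { return bonuses.Sum(b => b.Apply(vendor)); }
}

public class ExtraBonus : BonusCalculator, Bonus
{
    public ExtraBonus(IEnumerable<Bonus> bonus) : base(bonus) { }

    public decimal Apply(Vendor vendor)
    {
        decimal theBonus = CalcBonus(vendor);
        return theBonus > 2000 ? theBonus * 1.10m : theBonus;
    }
}

public static class CompositionDemo
{
    public static decimal Total(Vendor vendor)
    {
        // Inner group: 1500 + 1000 = 2500, above 2000, so it pays 2500 * 1.10 = 2750.
        var extra = new ExtraBonus(new Bonus[] { new FixedBonus(1500m), new FixedBonus(1000m) });

        // The outer calculator treats the whole group as one more Bonus: 2750 + 100 = 2850.
        var calculator = new BonusCalculator(new Bonus[] { extra, new FixedBonus(100m) });
        return calculator.CalcBonus(vendor);
    }
}
```

The outer calculator has no idea that one of its bonuses is a group with its own rule, which is what makes the pieces easy to mix and match.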

Final thoughts

One of the things I like about using composition is that it forces you to decompose a problem into its simplest abstractions, which you can then use as building blocks to create complex behavior with great flexibility at runtime. Now it’s time for a revelation: the actual text in the GoF book reads

Favor object composition over class inheritance

It’s not my intention to explain the reasons behind this principle in this post, but the hint is in the words object and class. Think about it and let me know your observations.