Encapsulation vs Information Hiding

Information hiding, as defined by Parnas, is the act of hiding design decisions from other modules so that changing those decisions later has no impact on them. Encapsulation says nothing about such things. It only cares about grouping related things inside a capsule; it says nothing about how transparent that capsule is.
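To make the distinction concrete, here is a minimal sketch. Both classes and all of their members are hypothetical, invented only for illustration. `OpenReadings` groups data with the operation that uses it, so it is a capsule, yet its storage decision (a `List<double>`) is visible to every caller. `HiddenReadings` groups the same things but keeps that decision to itself, which is closer to information hiding in the Parnas sense.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical example: grouped, but fully transparent.
// Callers can see (and depend on) the fact that readings live in a List<double>.
public class OpenReadings
{
    public List<double> Values = new List<double>();

    public double Average()
    {
        double sum = 0;
        foreach (var v in Values) sum += v;
        return Values.Count == 0 ? 0 : sum / Values.Count;
    }
}

// Hypothetical example: same grouping, but the storage decision is hidden.
// Only a running sum and a count are kept; callers cannot tell the difference.
public class HiddenReadings
{
    private double sum;
    private int count;

    public void Add(double value)
    {
        sum += value;
        count++;
    }

    public double Average()
    {
        return count == 0 ? 0 : sum / count;
    }
}

public class ReadingsDemo
{
    public static void Main()
    {
        var open = new OpenReadings();
        open.Values.Add(10);   // the caller touches the list directly
        open.Values.Add(20);

        var hidden = new HiddenReadings();
        hidden.Add(10);        // the caller only knows about Add and Average
        hidden.Add(20);

        // Both capsules give the same answer; only one of them hides how.
        Console.WriteLine(open.Average() == hidden.Average());
    }
}
```

If `HiddenReadings` later switched to storing every value, no caller would need to change. The same switch in `OpenReadings` would touch every place that reads or writes `Values`.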

Encapsulation vs Access Modifiers

Oftentimes I’m asked to interview a candidate to see if they are suitable for a software development position. When I ask about encapsulation, 9 out of 10 will refer to access modifiers. They will talk about public, private, and other keywords and access levels. Actually, access modifiers are more closely related to a concept called data hiding.

Encapsulation vs Data Hiding

If you are reading this, chances are that you have already found and read other articles related to the topic. And you probably found a reference to data hiding, saying that encapsulation is a way to achieve data hiding or something along those lines. But that doesn’t really help you get a clearer picture, right?

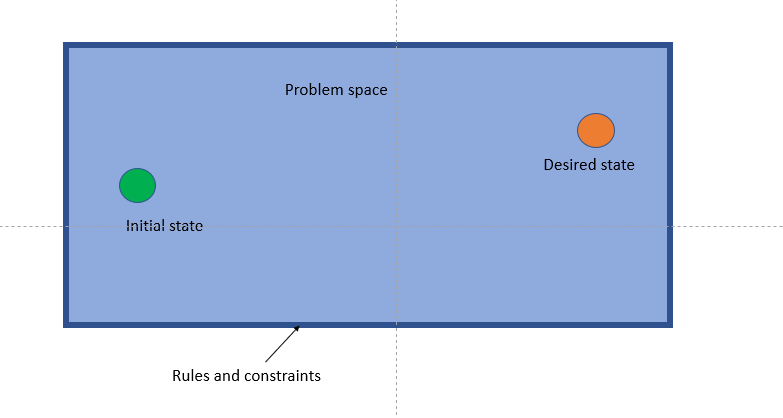

Let’s try to look at it from another angle. What is data hiding about? As the name implies, it is about hiding data from the world. Hiding it how? Well, you hide it behind a boundary. Inside that boundary, a piece of data is well known. But outside of it, it’s non-existent. So data hiding is all about, well, data.

This raises a question: how do you define a boundary for a piece of data?

Encapsulation and Cohesiveness

Well, there’s a well-known principle for creating boundaries: cohesiveness. Cohesiveness is about putting together all the things that are related in some way. The “some way” part can change depending on the scope, but it is usually about behavior.

It means to take the data and the operations that work upon it and draw a boundary around them. Sounds familiar?

So what’s encapsulation?

According to Vladimir Khorikov:

Encapsulation is the act of protecting against data inconsistency

Both data hiding and cohesiveness are guides we use to avoid ending up in an inconsistent state. First, you put a boundary around data and the operations that act upon it, and then you step into the data/information hiding domain by making the data invisible (at some level) outside that boundary.
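As a minimal sketch of that idea, consider the class below. The `Account` class, its members, and the no-overdraft rule are all assumptions made up for illustration. The boundary groups a balance with the operations that change it, then hides the setter so the only way to modify the balance is through code that keeps it consistent.

```csharp
using System;

// Hypothetical example: the rule "the balance never goes negative"
// lives inside the same boundary as the data it protects.
public class Account
{
    // Data hiding: nobody outside this class can assign the balance directly.
    public decimal Balance { get; private set; }

    public void Deposit(decimal amount)
    {
        if (amount <= 0)
            throw new ArgumentOutOfRangeException(nameof(amount), "Deposit must be positive.");
        Balance += amount;
    }

    public void Withdraw(decimal amount)
    {
        if (amount <= 0)
            throw new ArgumentOutOfRangeException(nameof(amount), "Withdrawal must be positive.");
        if (amount > Balance)
            throw new InvalidOperationException("Insufficient funds.");
        Balance -= amount;
    }
}

public class AccountDemo
{
    public static void Main()
    {
        var account = new Account();
        account.Deposit(100m);
        account.Withdraw(30m);

        try
        {
            account.Withdraw(500m); // would leave the account in an inconsistent state
        }
        catch (InvalidOperationException)
        {
            Console.WriteLine("Withdrawal rejected; balance is still " + account.Balance);
        }
    }
}
```

Had `Balance` been a public field, any caller could have set it to a negative number and the rule would have existed only by convention.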

Easy peasy, right? One interesting thing about encapsulation is that it results in abstractions all over the code.

Encapsulation and Abstraction

Abstraction and encapsulation share an intimate relationship.

Simply put, encapsulation leads to the discovery of new abstractions. This is the reason why refactoring works.

Let’s look at some definitions:

In computing, an abstraction layer or abstraction level is a way of hiding the working details of a subsystem, allowing the separation of concerns to facilitate interoperability and platform independence.

– Wikipedia

In other words, the level of abstraction is the opposite of the level of detail: the higher the abstraction level the lower the level of detail, and vice-versa.

The essence of abstraction is preserving information that is relevant in a given context, and forgetting information that is irrelevant in that context.

– John V. Guttag[1]

So an abstraction (noun) is just the representation of a concept in a way that is relevant to a given context (the abstraction level).

Which is precisely what we do when we encapsulate.

Encapsulation: First abstraction level

Look at the following code:

    using System;
    using System.Collections.Generic;

    public class Test
    {
        public static void Main()
        {
            decimal total = 0;
            decimal tax = 0;
            var order = new Order();
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Shampoo", Price = 12.95m }, Quantity = 2 });
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Soap", Price = 8m }, Quantity = 5 });
            foreach (var line in order.Lines)
            {
                total += line.Quantity * line.Item.Price;
            }
            tax = total * 0.16m;
            Console.WriteLine(total + tax);
        }
    }

    public class Order
    {
        public Order()
        {
            Lines = new List<OrderLine>();
        }

        public List<OrderLine> Lines { get; set; }
    }

    public class OrderLine
    {
        public Item Item { get; set; }
        public int Quantity { get; set; }
    }

    public class Item
    {
        public string Name { get; set; }
        public decimal Price { get; set; }
    }

Let’s try to draw some boundaries.

First, let’s look at the variables in the Main method. There are 3: order, total, and tax.

The trick here is to find where these variables are being used.

Let’s start with order.

So, in a nutshell, order is being used to calculate the value of total. Let’s take a look at that variable then.

Here we can see that total is in reality a subtotal (before taxes), and it’s used to calculate both the tax and the real total, which is implicit in the expression total + tax. So let’s fix that: let’s rename the total variable to subtotal and make the implicit real total explicit by assigning it to a variable called total.

    using System;
    using System.Collections.Generic;

    public class Test
    {
        public static void Main()
        {
            decimal subtotal = 0;
            decimal tax = 0;
            var order = new Order();
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Shampoo", Price = 12.95m }, Quantity = 2 });
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Soap", Price = 8m }, Quantity = 5 });
            foreach (var line in order.Lines)
            {
                subtotal += line.Quantity * line.Item.Price;
            }
            tax = subtotal * 0.16m;
            var total = subtotal + tax;
            Console.WriteLine(total);
        }
    }

Good, now let’s move to the next variable, tax.

As you can see, tax is used to calculate the new total. Notice again that we have an implicit piece of data in there, 0.16m, so let’s make it explicit. Remember, you’re looking for data and the code that acts upon that data, so try to make the data easy to spot. Let’s rename tax to taxes and put the tax percentage into a variable called tax.

    using System;
    using System.Collections.Generic;

    public class Test
    {
        public static void Main()
        {
            decimal subtotal = 0;
            decimal taxes = 0;
            var order = new Order();
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Shampoo", Price = 12.95m }, Quantity = 2 });
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Soap", Price = 8m }, Quantity = 5 });
            foreach (var line in order.Lines)
            {
                subtotal += line.Quantity * line.Item.Price;
            }
            var tax = 0.16m;
            taxes = subtotal * tax;
            var total = subtotal + taxes;
            Console.WriteLine(total);
        }
    }

Mmmm… I think we can now encapsulate some things. First, that tax variable and the operation used to calculate the value of taxes belong together, so let’s draw a boundary around them.

At the lowest abstraction level, the encapsulation boundary is a function.

    using System;
    using System.Collections.Generic;

    public class Test
    {
        public static void Main()
        {
            decimal subtotal = 0;
            decimal taxes = 0;
            var order = new Order();
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Shampoo", Price = 12.95m }, Quantity = 2 });
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Soap", Price = 8m }, Quantity = 5 });
            foreach (var line in order.Lines)
            {
                subtotal += line.Quantity * line.Item.Price;
            }
            taxes = CalculateTaxes(subtotal);
            var total = subtotal + taxes;
            Console.WriteLine(total);
        }

        static decimal CalculateTaxes(decimal amount)
        {
            var tax = 0.16m;
            return amount * tax;
        }
    }

Easy peasy, right? Let’s move backward and encapsulate the subtotal calculation.

    using System;
    using System.Collections.Generic;

    public class Test
    {
        public static void Main()
        {
            decimal subtotal = 0;
            decimal taxes = 0;
            var order = new Order();
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Shampoo", Price = 12.95m }, Quantity = 2 });
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Soap", Price = 8m }, Quantity = 5 });
            subtotal = CalculateSubtotal(order);
            taxes = CalculateTaxes(subtotal);
            var total = subtotal + taxes;
            Console.WriteLine(total);
        }

        static decimal CalculateTaxes(decimal amount)
        {
            var tax = 0.16m;
            return amount * tax;
        }

        static decimal CalculateSubtotal(Order order)
        {
            decimal subtotal = 0;
            foreach (var line in order.Lines)
            {
                subtotal += line.Quantity * line.Item.Price;
            }
            return subtotal;
        }
    }

Something funny is going on. Why do we still have data (variables) lying around even after we encapsulated them? They should be non-existent to the world outside of the boundary, right? Well, there are ways to go about this. First, since the taxes variable gets used only once, we can replace it with a call to the function.

    using System;
    using System.Collections.Generic;

    public class Test
    {
        public static void Main()
        {
            decimal subtotal = 0;
            var order = new Order();
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Shampoo", Price = 12.95m }, Quantity = 2 });
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Soap", Price = 8m }, Quantity = 5 });
            subtotal = CalculateSubtotal(order);
            var total = subtotal + CalculateTaxes(subtotal);
            Console.WriteLine(total);
        }

        static decimal CalculateTaxes(decimal amount)
        {
            var tax = 0.16m;
            return amount * tax;
        }

        static decimal CalculateSubtotal(Order order)
        {
            decimal subtotal = 0;
            foreach (var line in order.Lines)
            {
                subtotal += line.Quantity * line.Item.Price;
            }
            return subtotal;
        }
    }

Now, let’s talk about the subtotal variable. This one is used more than once, so we can’t inline it as we did previously (we could, but then we would calculate the same value twice). But this should pique our interest. Whenever we find this situation, it means there are operations related to this data and we need to encapsulate further! After looking carefully, we notice we haven’t done anything about the total variable! Are there any operations related to it? Let’s pack ’em up!

    using System;
    using System.Collections.Generic;

    public class Test
    {
        public static void Main()
        {
            var order = new Order();
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Shampoo", Price = 12.95m }, Quantity = 2 });
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Soap", Price = 8m }, Quantity = 5 });
            Console.WriteLine(CalculateTotal(order));
        }

        static decimal CalculateTaxes(decimal amount)
        {
            var tax = 0.16m;
            return amount * tax;
        }

        static decimal CalculateSubtotal(Order order)
        {
            decimal subtotal = 0;
            foreach (var line in order.Lines)
            {
                subtotal += line.Quantity * line.Item.Price;
            }
            return subtotal;
        }

        static decimal CalculateTotal(Order order)
        {
            decimal subtotal = 0;
            subtotal = CalculateSubtotal(order);
            var total = subtotal + CalculateTaxes(subtotal);
            return total;
        }
    }

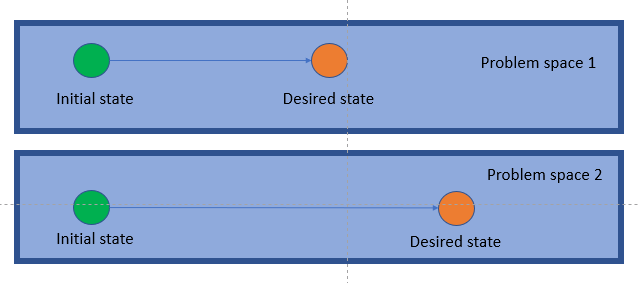

Let’s review this. Now we have an order with some data and the display of the total amount of money to pay for that order. How the total is calculated is irrelevant in this context. We switched from the how (lower abstraction level, higher level of detail) to the what (higher abstraction level, lower level of detail). And this happens every time we encapsulate code.

Ok. Now we’re ready for the next step.

Encapsulation: Second abstraction level

If you have read up to this point, you must be tired. I know I am just from writing it. So let’s make this fast.

Let me ask you something. Do you see a piece of data and a piece of code that acts upon it? What’s that? Correct, order! It’s just that this time we are dealing with a data structure rather than primitives. When you find yourself using data from inside a data structure, encapsulating it behind a function won’t solve the problem. You need to turn the data structure into a full-fledged object by moving the functionality (behavior) into it.

At the second abstraction level, the encapsulation boundary is an object.

    using System;
    using System.Collections.Generic;

    public class Test
    {
        public static void Main()
        {
            var order = new Order();
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Shampoo", Price = 12.95m }, Quantity = 2 });
            order.Lines.Add(new OrderLine { Item = new Item { Name = "Soap", Price = 8m }, Quantity = 5 });
            Console.WriteLine(order.CalculateTotal());
        }
    }

    public class Order
    {
        public Order()
        {
            Lines = new List<OrderLine>();
        }

        public List<OrderLine> Lines { get; set; }

        static decimal CalculateTaxes(decimal amount)
        {
            var tax = 0.16m;
            return amount * tax;
        }

        decimal CalculateSubtotal()
        {
            decimal subtotal = 0;
            foreach (var line in Lines)
            {
                subtotal += line.Quantity * line.Item.Price;
            }
            return subtotal;
        }

        public decimal CalculateTotal()
        {
            decimal subtotal = 0;
            subtotal = CalculateSubtotal();
            var total = subtotal + CalculateTaxes(subtotal);
            return total;
        }
    }

    public class OrderLine
    {
        public Item Item { get; set; }
        public int Quantity { get; set; }
    }

    public class Item
    {
        public string Name { get; set; }
        public decimal Price { get; set; }
    }

There you go! How’s that? Do you still see members of the order being acted upon by code outside the order? Of course, the Lines collection! The Main method is still manipulating the Lines collection of the order! Let’s fix that!

    using System;
    using System.Collections.Generic;

    public class Test
    {
        public static void Main()
        {
            var order = new Order();
            order.AddLine(new Item { Name = "Shampoo", Price = 12.95m }, 2);
            order.AddLine(new Item { Name = "Soap", Price = 8m }, 5);
            Console.WriteLine(order.CalculateTotal());
        }
    }

    public class Order
    {
        public Order()
        {
            Lines = new List<OrderLine>();
        }

        List<OrderLine> Lines { get; set; }

        public void AddLine(Item item, int qty)
        {
            Lines.Add(new OrderLine { Item = item, Quantity = qty });
        }

        static decimal CalculateTaxes(decimal amount)
        {
            var tax = 0.16m;
            return amount * tax;
        }

        decimal CalculateSubtotal()
        {
            decimal subtotal = 0;
            foreach (var line in Lines)
            {
                subtotal += line.Quantity * line.Item.Price;
            }
            return subtotal;
        }

        public decimal CalculateTotal()
        {
            decimal subtotal = 0;
            subtotal = CalculateSubtotal();
            var total = subtotal + CalculateTaxes(subtotal);
            return total;
        }
    }

    public class OrderLine
    {
        public Item Item { get; set; }
        public int Quantity { get; set; }
    }

    public class Item
    {
        public string Name { get; set; }
        public decimal Price { get; set; }
    }

Voilà! And while I was at it, since no one else was playing around with the Lines collection, I did some data hiding and made it private, so it became non-existent outside of the boundary it lives in, in this case the object. “What’s the point?” I can hear you say. “You are just replacing a data member with a method member, so what?” Well, what if you wanted to change the Lines definition from List<OrderLine> to Dictionary<string, OrderLine> for performance reasons? How many places would you have to change before encapsulating that? How many after? And what if you wanted to add a validation to check that you are not inserting 2 lines with the same product and instead just increase the quantity?
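Here is a rough sketch of what both changes could look like once the collection is hidden. It is one possible take, not the definitive one: keying the dictionary by the item’s name is an assumption on my part (a real system would probably use a product ID), and the tax calculation is inlined only to keep the listing short.

```csharp
using System;
using System.Collections.Generic;

public class Item
{
    public string Name { get; set; }
    public decimal Price { get; set; }
}

public class OrderLine
{
    public Item Item { get; set; }
    public int Quantity { get; set; }
}

public class Order
{
    // Assumption: the item name is unique enough to serve as the key.
    private readonly Dictionary<string, OrderLine> lines = new Dictionary<string, OrderLine>();

    public void AddLine(Item item, int qty)
    {
        OrderLine existing;
        if (lines.TryGetValue(item.Name, out existing))
        {
            // Same product again: grow the existing line instead of adding a duplicate.
            existing.Quantity += qty;
        }
        else
        {
            lines[item.Name] = new OrderLine { Item = item, Quantity = qty };
        }
    }

    public decimal CalculateTotal()
    {
        decimal subtotal = 0;
        foreach (var line in lines.Values)
        {
            subtotal += line.Quantity * line.Item.Price;
        }
        return subtotal + subtotal * 0.16m; // same 16% tax as in the listings above
    }
}

public class Test
{
    public static void Main()
    {
        // Callers still use only AddLine and CalculateTotal.
        var order = new Order();
        order.AddLine(new Item { Name = "Shampoo", Price = 12.95m }, 2);
        order.AddLine(new Item { Name = "Soap", Price = 8m }, 3);
        order.AddLine(new Item { Name = "Soap", Price = 8m }, 2); // merged into the Soap line
        Console.WriteLine(order.CalculateTotal());
    }
}
```

The storage type and the duplicate check both changed, yet the calls in Main look exactly like the ones in the previous listing. Before the Lines collection was hidden, every `order.Lines.Add(...)` call site would have needed editing.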

By encapsulating the code, effectively raising the abstraction level, you start to deal with concepts in terms of what instead of how. And if you only show the what to the outside, you can always change the how on the inside.

Anyway, now let’s go back to the CalculateSubtotal function. Can you see a piece of data that is being used by a piece of code? 😉
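One possible answer, offered as a sketch rather than as the intended solution: the loop reaches into each line for Quantity and Item.Price, so that multiplication can move into OrderLine. The method name `CalculateLineTotal` is hypothetical, and `CalculateSubtotal` is made public here only so the demo can call it.

```csharp
using System;
using System.Collections.Generic;

public class Item
{
    public string Name { get; set; }
    public decimal Price { get; set; }
}

public class OrderLine
{
    public Item Item { get; set; }
    public int Quantity { get; set; }

    // Hypothetical method: the line now owns the arithmetic on its own data.
    public decimal CalculateLineTotal()
    {
        return Quantity * Item.Price;
    }
}

public class Order
{
    private readonly List<OrderLine> lines = new List<OrderLine>();

    public void AddLine(Item item, int qty)
    {
        lines.Add(new OrderLine { Item = item, Quantity = qty });
    }

    // The order asks each line for its total instead of computing it itself.
    // Public only for this demo; it is private in the listings above.
    public decimal CalculateSubtotal()
    {
        decimal subtotal = 0;
        foreach (var line in lines)
        {
            subtotal += line.CalculateLineTotal();
        }
        return subtotal;
    }
}

public class SubtotalDemo
{
    public static void Main()
    {
        var order = new Order();
        order.AddLine(new Item { Name = "Shampoo", Price = 12.95m }, 2);
        order.AddLine(new Item { Name = "Soap", Price = 8m }, 5);
        Console.WriteLine(order.CalculateSubtotal());
    }
}
```

With this in place, a per-line discount or a change in how prices are stored would stay inside OrderLine, and the order would not need to know about it.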

So there you have it. This is becoming too long, so I’ll wrap it up here. You can encapsulate forever at different abstraction levels: namespace, module, API, service, application, system, etc. It’s turtles all the way down!

Meanwhile, I challenge you to try this on your own codebase. Let me know about your experience in the comments! Have a good day!

P.S. The code in this post is part of a challenge I put out for the devs at my current job. Wanna give it a try? You can find it here https://github.com/unjoker/CoE_Challenge. Send me a PR so I can take a look at your solution 😉